How to handle the case where, with Kubernetes (K8S) using the IPVS proxy mode and a Service of type ClusterIP, requests to the Service fail to reach the backend Pods.

Background

With Kubernetes (K8S) using the IPVS proxy mode and a Service of type ClusterIP, requests sent to the Service do not reach the backend Pods.

Host configuration plan

| Server name (hostname) | OS version | Spec | Internal IP | External IP (simulated) |
|---|---|---|---|---|
| k8s-master | CentOS7.7 | 2C/4G/20G | 172.16.1.110 | 10.0.0.110 |
| k8s-node01 | CentOS7.7 | 2C/4G/20G | 172.16.1.111 | 10.0.0.111 |
| k8s-node02 | CentOS7.7 | 2C/4G/20G | 172.16.1.112 | 10.0.0.112 |

Reproducing the scenario

Deployment YAML

YAML file

`[root@k8s-master service]# pwd`

Start the Deployment and check its status

`[root@k8s-master service]# kubectl apply -f myapp-deploy.yaml`

Access with curl

`[root@k8s-master service]# curl 10.244.2.111/hostname.html`

Service of type ClusterIP

YAML file

`[root@k8s-master service]# pwd`

Start the Service and check its status

`[root@k8s-master service]# kubectl apply -f myapp-svc-ClusterIP.yaml`

Checking the IPVS information

`[root@k8s-master service]# ipvsadm -Ln`

This shows that, under normal conditions, a request to the Service is passed along to the backend Pods, which return a response.
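To confirm which backends IPVS actually holds for a given ClusterIP, the `ipvsadm -Ln` listing can be filtered down to one virtual service. The following is a minimal sketch: the function name `ipvs_backends` is hypothetical, and it assumes the usual `ipvsadm -Ln` layout in which each `TCP`/`UDP` virtual service line is followed by `->` lines for its real servers.

```shell
# List the real servers (backends) that IPVS holds for one virtual service.
# Reads `ipvsadm -Ln` output on stdin; $1 is the VIP:port to look up.
# Hypothetical helper; assumes the standard `ipvsadm -Ln` text layout.
ipvs_backends() {
  awk -v vip="$1" '
    # A virtual service line starts a new group; remember whether it is ours.
    $1 == "TCP" || $1 == "UDP" { in_svc = ($2 == vip); next }
    # Real server lines start with "->"; skip the column header line.
    in_svc && $1 == "->" && $2 != "RemoteAddress:Port" { print $2 }
  '
}

# Usage on a node (requires root):
#   ipvsadm -Ln | ipvs_backends 10.102.246.104:8080
```

If the Pod IPs are listed for the ClusterIP, as they are here, the IPVS rules themselves are in place and the fault lies further along the path.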

curl results

Accessing the Pod directly works normally, as shown below.

`[root@k8s-master service]# curl 10.244.2.111/hostname.html`

Access through the Service, however, fails, as shown below.

`[root@k8s-master service]# curl 10.102.246.104:8080`

处理过程

抓包核实

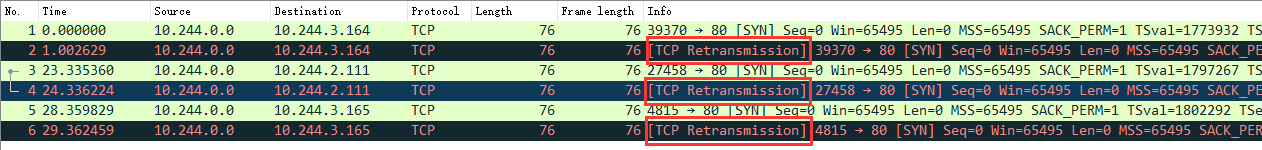

使用如下命令进行抓包,并通过Wireshark工具进行分析。

1 | tcpdump -i any -n -nn port 80 -w ./$(date +%Y%m%d%H%M%S).pcap |

结果如下图:

可见,已经向Pod发了请求,但是没有得到回复。结果TCP又重传了【TCP Retransmission】。

Checking the kube-proxy logs

`[root@k8s-master service]# kubectl get pod -A | grep 'kube-proxy'`

The kube-proxy logs show nothing abnormal.

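Inspecting and changing the NIC settings

Note: these steps were performed on the k8s-master node.

Further searching suggested that the problem might be caused by checksum offloading. The details are as follows:

`[root@k8s-master service]# ethtool -k flannel.1 | grep checksum`

flannel's network configuration turns on checksum offloading on the transmit side, when it should be turned off so that the physical NIC handles the checksum. Do the following:

`# Temporarily disable`

The change above is only temporary. After the machine reboots, the flannel virtual interface has checksum offloading enabled again.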
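A minimal sketch of this check-and-disable step follows. The helper name `tx_checksum_state` is hypothetical, and the feature name `tx-checksum-ip-generic` is an assumption (it is the one commonly toggled on flannel's VXLAN interface); verify it against the `ethtool -k flannel.1` output on your own nodes.

```shell
# Report whether TX checksum offload is enabled on an interface.
# Reads `ethtool -k <iface>` output on stdin; prints "on", "off", or "unknown".
# Hypothetical helper for illustration.
tx_checksum_state() {
  awk -F': *' '
    # Match only the summary line, not the indented tx-checksum-* sub-features.
    $1 ~ /^[[:space:]]*tx-checksumming$/ { split($2, f, " "); state = f[1] }
    END { print (state == "" ? "unknown" : state) }
  '
}

# Usage on a node (requires root):
#   ethtool -k flannel.1 | tx_checksum_state
#   # Assumed feature name; the setting is lost on reboot.
#   ethtool -K flannel.1 tx-checksum-ip-generic off
#   ethtool -k flannel.1 | tx_checksum_state
```

Note that `ethtool -k` (lowercase) only reads the feature flags, while `ethtool -K` (uppercase) changes them.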

Trying curl again

`[root@k8s-master ~]# curl 10.102.246.104:8080`

As shown above, access through the Service now works normally.

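Permanently disabling TX checksum offloading on the flannel interface

Note: perform these steps on all machines.

Create a systemd service with the following unit file

`[root@k8s-node02 ~]# cat /etc/systemd/system/k8s-flannel-tx-checksum-off.service`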

Enable the service at boot and start it

`systemctl enable k8s-flannel-tx-checksum-off`
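For reference, a unit of this kind could look like the sketch below. This is an assumption about the layout, not necessarily the exact file used here: the function name `render_tx_checksum_unit` is hypothetical, the unit is a oneshot tied to the interface's device unit, and it reuses the assumed `tx-checksum-ip-generic` feature name. The device unit name is built by simple concatenation, which only works for interface names that need no systemd escaping (such as `flannel.1`).

```shell
# Print a oneshot systemd unit that turns TX checksum offload off on an interface.
# $1: interface name (defaults to flannel.1).
# Hypothetical helper; the unit layout and feature name are assumptions.
render_tx_checksum_unit() {
  iface="${1:-flannel.1}"
  cat <<EOF
[Unit]
Description=Turn off TX checksum offload on ${iface}
After=sys-devices-virtual-net-${iface}.device

[Service]
Type=oneshot
ExecStart=/sbin/ethtool -K ${iface} tx-checksum-ip-generic off

[Install]
WantedBy=sys-devices-virtual-net-${iface}.device
EOF
}

# Usage on each node (requires root):
#   render_tx_checksum_unit flannel.1 > /etc/systemd/system/k8s-flannel-tx-checksum-off.service
#   systemctl daemon-reload
#   systemctl enable k8s-flannel-tx-checksum-off
#   systemctl start k8s-flannel-tx-checksum-off
```

Tying the unit to the device unit means it runs when the flannel interface appears, rather than at a fixed point in the boot sequence when the interface may not exist yet.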

Related reading

1. 关于k8s的ipvs转发svc服务访问慢的问题分析(一) (Analysis of slow svc access under k8s IPVS forwarding, part 1)

2. Kubernetes + Flannel: UDP packets dropped for wrong checksum – Workaround